We recently worked with a USA-based e-learning company on an application modernization project. This case study shows how serverless architecture can be employed in a project of that kind.

LMS Modernization

When Company X partnered with CloudNow to modernize their Learning Management System (LMS) application, a few key points stood out.

- A wide variation in the number of concurrent users on the student-facing side of the application was expected

- On the other hand, the administrative side of the application was likely to see fairly steady usage levels

- Company X planned to remain on a single cloud platform (in this case, Microsoft Azure) for the long term

Serverless LMS – The Approach

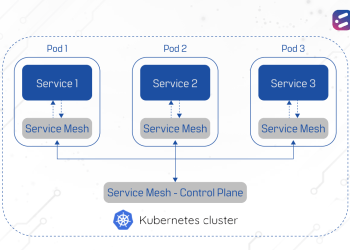

Based on these inputs, CloudNow designed the system to deploy the application's microservices on a blend of Kubernetes and serverless architecture.

For the admin section, where managing server load in real time was not a major concern, deploying the application on Kubernetes made the most sense in terms of flexibility and cost.

On the other hand, for the student-facing application, the load was likely to vary greatly, from a few thousand to hundreds of thousands of students accessing the application concurrently. Using Kubernetes in such a scenario would require investing in a large number of Kubernetes pods to cater to the highest expected traffic, which would be inefficient in terms of cost. Additionally, as traffic levels fluctuate, server resources would need to be scaled up or down in real time to keep both performance and cost optimized.
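To make the cost argument concrete, here is a rough back-of-the-envelope sketch in Python. Every number in it is an assumption chosen for illustration (the per-pod capacity and the hourly load profile are hypothetical, not figures from the project). It compares the capacity consumed when pods are sized for the busiest hour against capacity that follows actual demand.

```python
import math
from typing import Sequence

# Hypothetical figures for illustration only; none come from the project.
USERS_PER_POD = 500  # assumed concurrent users one pod can serve

# Assumed concurrent users for each hour of a sample day (24 entries).
HOURLY_USERS = [3_000] * 8 + [40_000] * 8 + [200_000] * 2 + [20_000] * 6


def pods_needed(users: int) -> int:
    """Smallest number of pods that can serve `users` concurrent users."""
    return math.ceil(users / USERS_PER_POD)


def peak_provisioned_pod_hours(load: Sequence[int]) -> int:
    """Pod-hours when capacity is fixed at the busiest hour's requirement."""
    return pods_needed(max(load)) * len(load)


def demand_following_pod_hours(load: Sequence[int]) -> int:
    """Pod-hours when capacity tracks each hour's actual load."""
    return sum(pods_needed(users) for users in load)


if __name__ == "__main__":
    print("peak-provisioned:", peak_provisioned_pod_hours(HOURLY_USERS))
    print("demand-following:", demand_following_pod_hours(HOURLY_USERS))
```

With this made-up profile, sizing for the peak consumes several times more pod-hours than capacity that tracks demand. The sketch compares units of capacity only; it is not a pricing model, and real serverless billing works differently from per-pod billing.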

With these considerations in mind, CloudNow and Company X decided to take the path of serverless architecture for the student app.

Benefits and Challenges of Serverless Architecture

There were several major benefits to this approach. With Azure Functions on Company X’s Azure platform, resource management is no longer a challenge. Azure manages deployment and resource allocation automatically: it spins VMs up or down as required and handles scaling VM resources up or down.

The use of serverless architecture with Azure Functions did present one challenge, however. Each Azure Function generates an individual URL on deployment. Since each Azure Function essentially represents a microservice for a specific piece of functionality, each feature of the application would have its own URL. This is not ideal, either as a coding practice or from a security standpoint.

To address these concerns, CloudNow configured an API Gateway to sit on top of the Azure Functions. The API Gateway has only a single URL: it receives all requests and internally routes each one to the appropriate function. Additionally, each request is checked to confirm that it comes from a legitimate user. This is done using a subscription key in the request header, which the API Gateway validates.
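The gateway's two jobs, validating the subscription key and routing to the right function, can be sketched in a few lines of Python. This is an in-process illustration of the idea, not the configuration CloudNow used. The route table, backend URLs, and key are hypothetical. The header name is the default used by Azure API Management, on the assumption (not stated above) that a gateway of that kind was used.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Default subscription-key header in Azure API Management (assumed gateway).
SUBSCRIPTION_HEADER = "Ocp-Apim-Subscription-Key"

# Hypothetical keys and per-function backend URLs, for illustration only.
VALID_KEYS = {"demo-key-123"}
ROUTES = {
    "/courses": "https://courses-fn.example.net/api/courses",
    "/quizzes": "https://quizzes-fn.example.net/api/quizzes",
}


@dataclass
class Decision:
    status: int                        # HTTP status the gateway would return
    backend_url: Optional[str] = None  # set only when the request is routed


def route(path: str, headers: Dict[str, str]) -> Decision:
    """Validate the subscription key, then map the path to a backend URL."""
    # HTTP header names are case-insensitive, so normalize before lookup.
    normalized = {name.lower(): value for name, value in headers.items()}
    if normalized.get(SUBSCRIPTION_HEADER.lower()) not in VALID_KEYS:
        return Decision(status=401)

    for prefix, backend in ROUTES.items():
        if path == prefix or path.startswith(prefix + "/"):
            # Forward whatever follows the matched prefix to the function.
            return Decision(status=200, backend_url=backend + path[len(prefix):])
    return Decision(status=404)


if __name__ == "__main__":
    print(route("/courses/42", {SUBSCRIPTION_HEADER: "demo-key-123"}))
    print(route("/courses/42", {}))
```

Callers see only the gateway's single URL. The individual function URLs stay in the route table, and a request without a valid key is rejected before it reaches any function.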

One more challenge was the need for large files to be uploaded from the admin section of the application. Large file uploads are not a use case suited to serverless architecture, since they require high I/O and network resources. Therefore this functionality was deployed using Kubernetes rather than with an Azure Function.

The Deployment

Here’s a quick look at what the final application deployment looks like. As you can see, it is a hybrid of Kubernetes-based container orchestration and Azure Function-based serverless architecture.

At CloudNow, finding innovative solutions to real business problems through our expertise in modern technologies is what we are all about. If you found our approach to this challenging project interesting, or if you are considering initiating your own application modernization project soon, let’s talk!